Understanding your Results

Waldo’s new Results Studio makes it easier than ever to understand your test results. It lets you watch test playback recordings, view expected and recorded screenshots side by side, and see a full breakdown of your entire test in the timeline.

The Results Studio

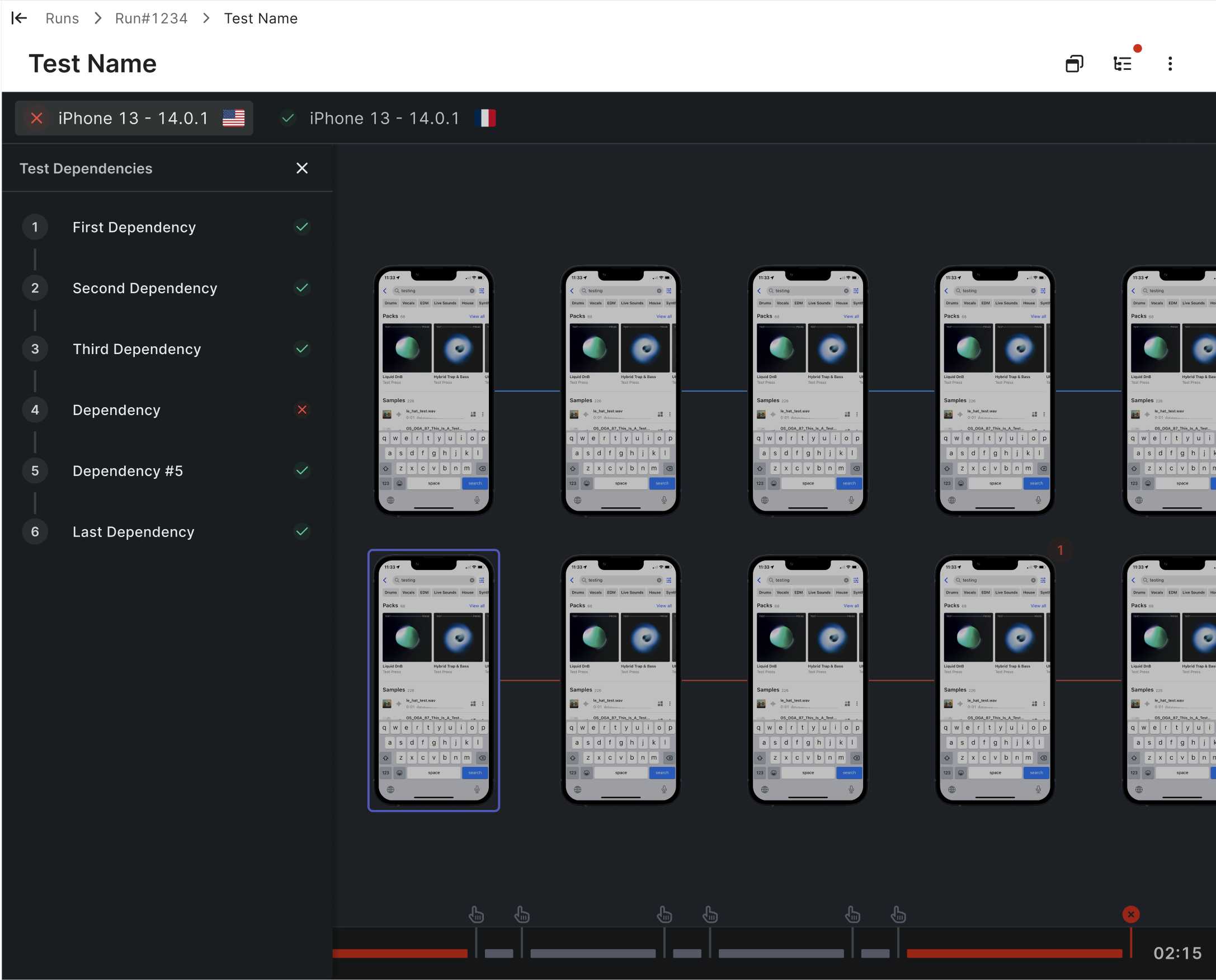

The Results Studio is divided into four parts: the playback panel, the step comparison view, the timeline, and the dependency inspector.

Playback Panel: The test playback panel (the large iPhone 13 in the screenshot) displays a video recording of your test.

Step Comparison View: The step comparison view (the two rows of iPhones) shows screenshots from your expected results (top) and your actual results (bottom). The purple highlight indicates the step within the expected flow that corresponds to the current playback position of the recording.

Timeline: The timeline shows an event-based breakdown of your test. The bars represent the time your application takes to pass the assertions set within a given step, and the icons represent the interactions that move your app into the next step of the test.

Dependency Inspector: The dependency inspector shows the dependencies of a given test and their status. The dependency icon in the top-right action menu displays a red badge when any of the test's dependencies are failing. Click any dependency to inspect it in the Results Studio.

Inspecting your results

Each test within a run completes with a status. Regardless of the replay status, there are a few tools you can use to understand your results.

- Inspect dependencies: Before addressing errors within a given test, ensure that there are no errors within the test's dependencies that are affecting your results.

- Watch the recording: Clicking the 'play' button will display a video recording of your test running at actual speed. This can help you identify issues that are difficult to catch using the static screenshots Waldo provides in the Step Comparison View.

- Download logs and/or crash report: Logs are generated on the device as your test runs and include only entries generated by your app from the time it is launched. Logs for the test's dependencies are included as well.

Replay Statuses

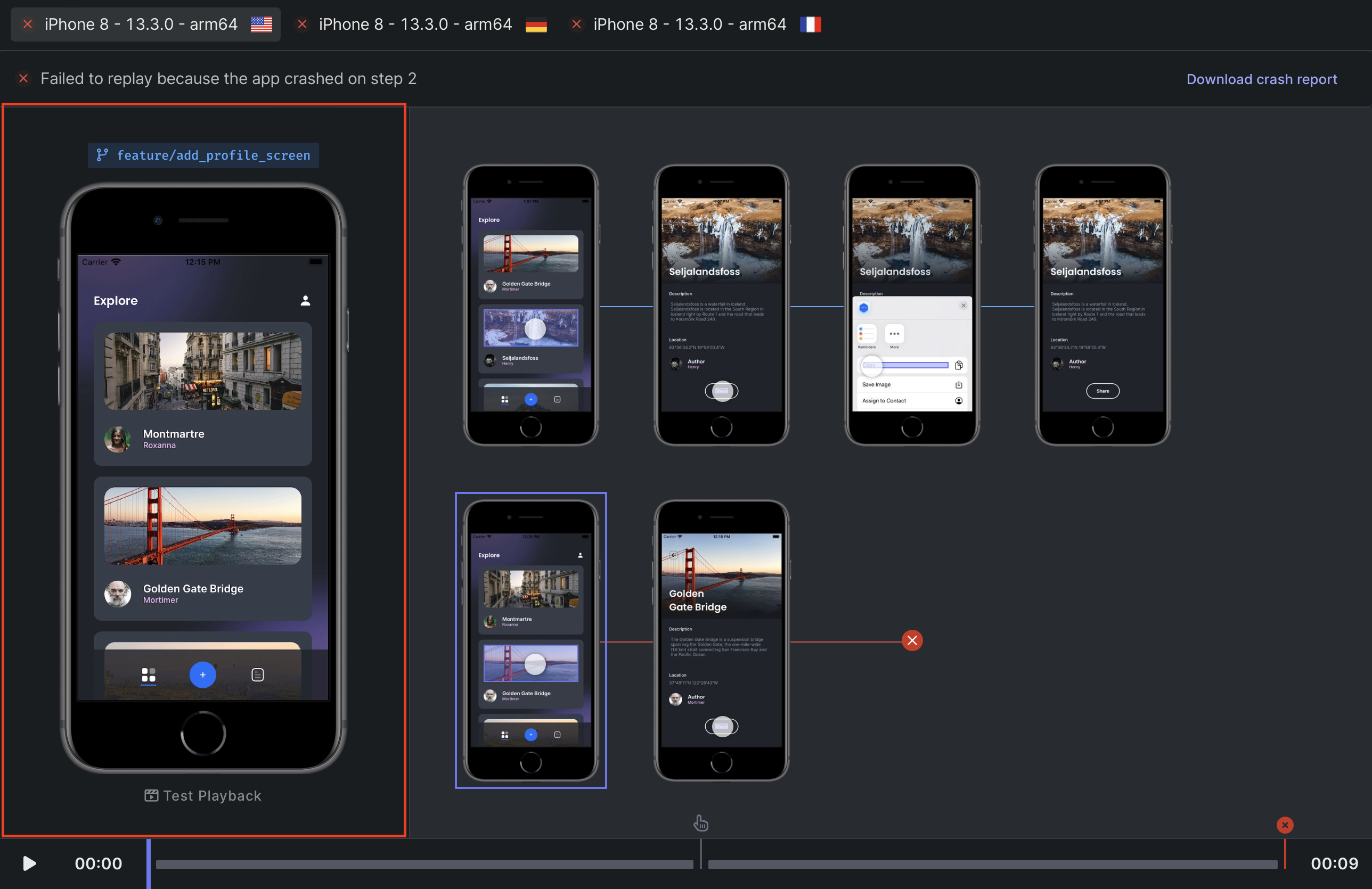

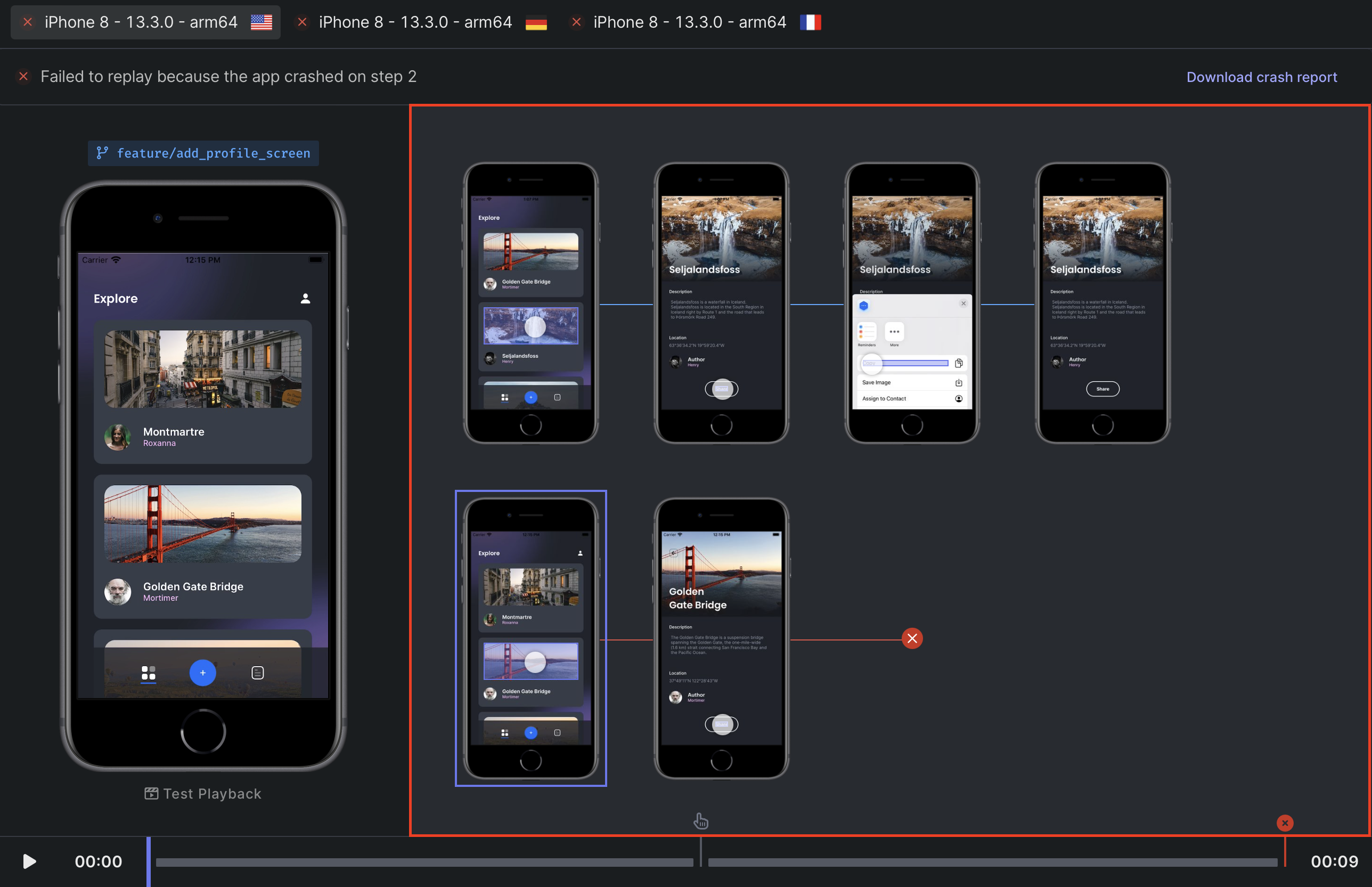

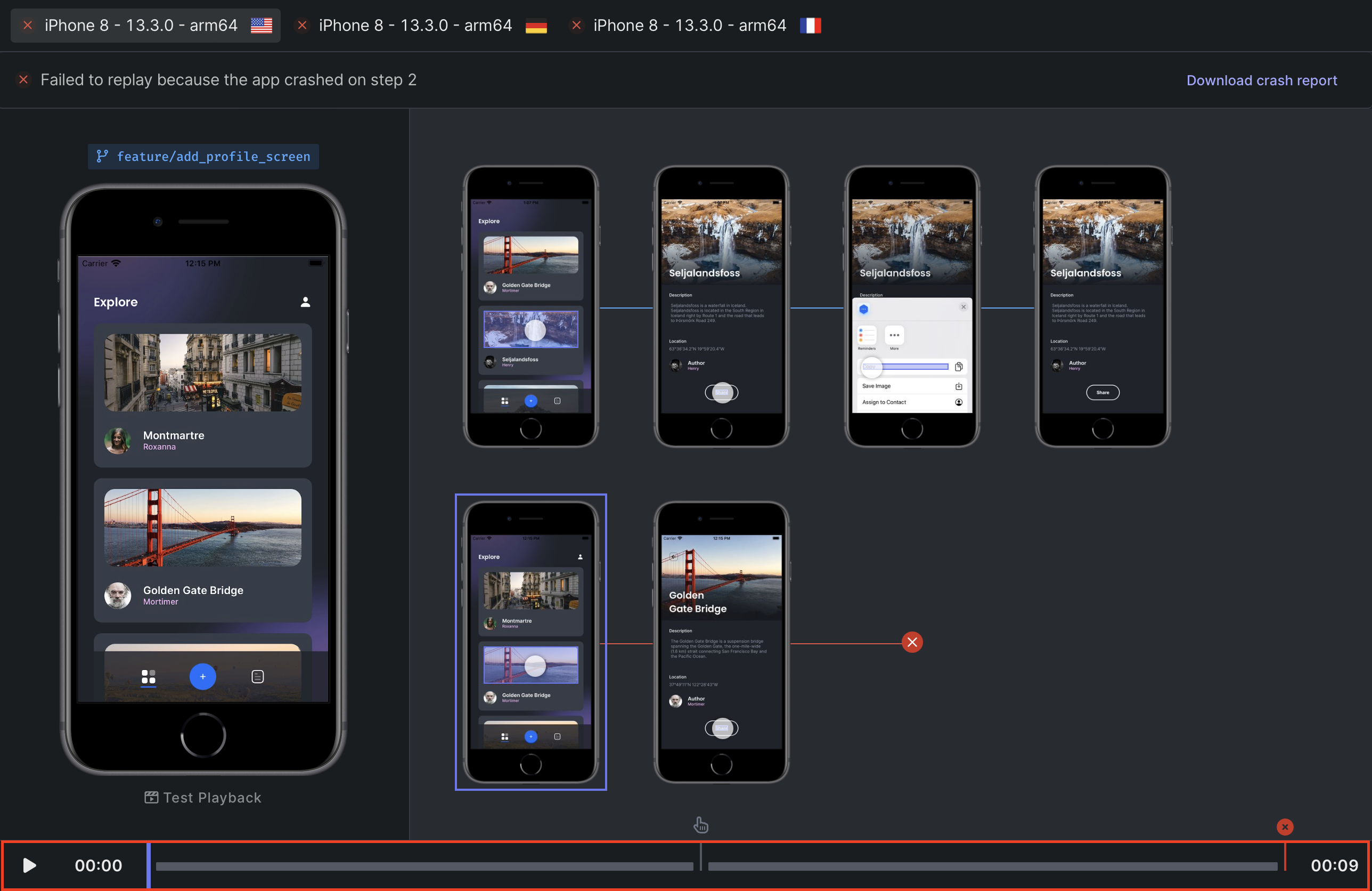

App Crash

If your test fails to replay on a device configuration because your app crashed, Waldo indicates exactly where in the flow the crash occurred and what interaction caused it.

What can you do?

- Download the system logs and/or crash report to inspect what caused your app to crash (on Android, the crash report is included with the system log).

- Try reproducing the issue locally: click the Builds tab and download the corresponding version.

Cannot replay

If your test fails to replay on a device configuration in its current context, examine the replay to understand the failure. Typically, the failure occurs for one of the following reasons:

- Your app’s behavior has changed.

- There is a bug in your app.

- It is the first time Waldo has replayed on that device configuration, and Waldo is not able to automatically determine how to correctly replay the flow there.

What can you do?

If your app is simply not behaving properly, you can use the same approach described under “App Crash” to diagnose the root cause of the problem.

If your app has changed, Waldo cannot determine how to proceed on one step of the flow, or both, select Review. This takes you to the step overview, where you have several options:

- You can directly fix the interaction in the details of the step (see Fixing Interactions for more details). This gives Waldo another reference recording of your flow under the new conditions, and Waldo chooses which recording to use for each condition.

- If the change is more complex than that, you can always update your test: click the re-record button, and you will be redirected to the recorder to update your flow from this specific step.

Assertions failed

Even if your test replays successfully, Waldo may indicate one or more assertions that do not pass validation.

What can you do?

As with “Cannot replay”, you should first determine whether the failed assertion indicates an actual problem (the app is not behaving properly) or that the assertion needs to be updated (or removed entirely).

If you determine that the assertion is outdated, you can click on the Fix assertions button and either:

- Fix the assertion: make sure it now uses the new value. For example, if a button now says “Sign up” instead of “Register”, and that is the expected behavior, you can simply update the value of the text assertion attached to it.

- Delete the assertion: sometimes an assertion is no longer useful. If you delete it by mistake, you can simply go back to the test definition page and add it again.

Note: once you fix an assertion, future runs evaluate it against the new state of the flow (the screen on the right-hand side). In particular, for a similarity assertion, the new threshold you set is compared against that right-hand screen in future runs.

For a step-by-step guide, visit Fixing Assertions.

Flaky tests

A “flaky” test is one that yields different outcomes when played multiple times.

To detect them, we always retry a test whenever we see any kind of error during replay:

- if the retry has the exact same result, we can categorize the test as either "crash", "error" or "cannot replay".

- if the retry does NOT have the same result, we categorize the test as flaky.
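The retry rule above can be summarized as a small decision function. This is a minimal illustrative sketch, not Waldo's implementation: the `Outcome` enum, the `classify_replay` function, the `replay` callable, and the "success" label are hypothetical names introduced here. It reads "the same result" literally, so two different error statuses also count as flaky.

```python
from enum import Enum
from typing import Callable


class Outcome(Enum):
    # Hypothetical replay outcomes; only the error labels come from the text above.
    SUCCESS = "success"
    CRASH = "crash"
    ERROR = "error"
    CANNOT_REPLAY = "cannot replay"


def classify_replay(replay: Callable[[], Outcome]) -> str:
    """Replay once; on any error, retry once and compare the two outcomes."""
    first = replay()
    if first is Outcome.SUCCESS:
        return first.value  # no error, so no retry is needed
    second = replay()
    if second is first:
        return first.value  # same result twice: report that status
    return "flaky"  # different results: the test is flaky


def scripted(*outcomes: Outcome) -> Callable[[], Outcome]:
    """Hypothetical stand-in for a real replay: returns the given outcomes in order."""
    it = iter(outcomes)
    return lambda: next(it)


print(classify_replay(scripted(Outcome.CRASH, Outcome.CRASH)))    # crash
print(classify_replay(scripted(Outcome.CRASH, Outcome.SUCCESS)))  # flaky
```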

What can you do?

For flaky tests, we show a toggle in the banner that lets you switch between the different outcomes.

Start by using this toggle to determine exactly what caused the flakiness.

It will generally be for one of two reasons:

- your app is indeed flaky: for instance, you run A/B tests, or a network issue occurred during one of the API calls. In that case, there is nothing to change in Waldo.

- the recording can be fine-tuned: usually this is a matter of telling Waldo more precisely what to expect on screen.

There are two very common scenarios for the latter:

- Sometimes you might have added assertions on properties that are NOT deterministic. For instance, you might have a pixel-perfect assertion on a box with a random background color. In that case, simply deleting the assertion will get rid of the flakiness.

- Waldo was not able to determine WHEN to perform the interaction on screen (the infamous “wait problem”). For instance, imagine that your app lets the user upload a photo, displays the photo while it is uploading, and, once the upload completes, lets the user tap the photo to navigate to another screen. During recording, everything works correctly. Upon replay, if the timing is off, the tap occurs too soon and nothing happens because the upload has not finished. To force Waldo to wait longer on that particular screen, you can update the time limit (see Time Limit for more details). Another solution is to add an assertion on an element that only appears after the upload completes (perhaps a checked checkbox, or some informative text).

See Assertions to learn more.
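One way to picture the two fixes for the photo-upload example is a polling loop: before performing a step's interaction, keep checking the step's assertions until they all pass or the time limit runs out. The sketch below assumes this model purely for illustration; `wait_for_step`, its parameters, and the simulated upload are hypothetical and do not describe Waldo's internals.

```python
import time
from typing import Callable, Sequence

Assertion = Callable[[], bool]  # hypothetical: returns True when the on-screen condition holds


def wait_for_step(
    assertions: Sequence[Assertion],
    time_limit_s: float,
    poll_interval_s: float = 0.01,
) -> bool:
    """Return True as soon as every assertion passes, or False once time_limit_s elapses."""
    deadline = time.monotonic() + time_limit_s
    while True:
        if all(check() for check in assertions):
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(poll_interval_s)


def photo_visible() -> bool:
    return True  # simulated: the photo appears as soon as the upload starts


def upload_finished_after(seconds: float) -> Assertion:
    """Simulated checkmark that only appears once the upload has finished."""
    done_at = time.monotonic() + seconds
    return lambda: time.monotonic() >= done_at


# Asserting only on the photo: the step passes at once, so the tap would come too early.
print(wait_for_step([photo_visible], time_limit_s=1.0))  # True
# Adding the checkmark assertion with too short a time limit: the step times out.
print(wait_for_step([photo_visible, upload_finished_after(0.3)], time_limit_s=0.1))  # False
# The same assertions with a longer time limit: the step waits for the upload.
print(wait_for_step([photo_visible, upload_finished_after(0.3)], time_limit_s=1.0))  # True
```

The extra assertion makes the step wait for the right condition, and the time limit bounds how long that wait may last, which is why the two fixes often go together.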

In short, if the replay engine is not doing exactly what you expect, you can:

- remove the assertion: when it tests something non-deterministic

- add an assertion that enforces more conditions on this screen: usually when Waldo did not wait long enough even though all existing assertions on the screen passed

- adjust the time limit: when some assertions failed on that screen but would have passed with a longer wait
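The checklist above can also be read as a simple decision rule. This minimal sketch encodes it under the assumption that you have already compared the flaky outcomes and answered three yes/no questions about the problem screen; `StepObservation`, its fields, and `suggest_fix` are hypothetical names for illustration, not part of Waldo.

```python
from dataclasses import dataclass


@dataclass
class StepObservation:
    # Hypothetical summary of what you saw when comparing the flaky outcomes.
    asserts_nondeterministic_property: bool
    all_assertions_passed: bool
    would_pass_with_longer_wait: bool


def suggest_fix(obs: StepObservation) -> str:
    """Map an observation on one screen to the remedies in the checklist."""
    if obs.asserts_nondeterministic_property:
        return "remove the assertion"
    if obs.all_assertions_passed:
        # Waldo moved on too early even though every assertion passed.
        return "add an assertion"
    if obs.would_pass_with_longer_wait:
        return "adjust the time limit"
    # None of the above: the flakiness may come from the app itself.
    return "investigate the app"


print(suggest_fix(StepObservation(False, True, False)))  # add an assertion
print(suggest_fix(StepObservation(False, False, True)))  # adjust the time limit
```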

Dependency error

This status means that one of the test's dependencies could not be replayed properly, so Waldo never reached the point of attempting to replay the test itself.

What can you do?

There is not much to do on the test itself. Instead, fix the failing dependency directly.

In some very rare cases, you might see a test with a dependency error status even though all other tests pass. This can happen if one of the earlier tests is flaky. Consider this situation:

- Sign Up -> Create Message
- Sign Up -> Go to Settings

To play Create Message and Go to Settings, Waldo has to play Sign Up twice. Now imagine that Sign Up is flaky and passes 50% of the time. You might end up with Sign Up as a success (it passed when replaying Create Message) and Create Message as a success (it passed after Sign Up), but Go to Settings with a dependency error, because the second replay of Sign Up ran into an issue.
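The following sketch simulates this scenario under simplifying assumptions: each dependent test replays its whole dependency chain from scratch, retries are ignored, tests other than Sign Up always pass, and Sign Up's two replays are scripted as a pass followed by a failure instead of being drawn at random. The functions `replay_chain` and `make_replay` and the "failed" status label are hypothetical.

```python
from typing import Callable, Dict, Iterator, List


def replay_chain(chain: List[str], replay: Callable[[str], bool]) -> str:
    """Replay every dependency in order, then the test itself (the last name)."""
    for dependency in chain[:-1]:
        if not replay(dependency):
            return "dependency error"  # the target test is never attempted
    return "success" if replay(chain[-1]) else "failed"


def make_replay(sign_up_outcomes: Iterator[bool]) -> Callable[[str], bool]:
    """Return a replay function where only Sign Up is flaky."""
    def replay(test_name: str) -> bool:
        if test_name == "Sign Up":
            return next(sign_up_outcomes)  # scripted flaky outcome
        return True  # every other test is assumed to be stable
    return replay


replay = make_replay(iter([True, False]))  # Sign Up passes once, then fails
chains = [["Sign Up", "Create Message"], ["Sign Up", "Go to Settings"]]
statuses: Dict[str, str] = {chain[-1]: replay_chain(chain, replay) for chain in chains}
print(statuses)  # {'Create Message': 'success', 'Go to Settings': 'dependency error'}
```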

Recording a strong test suite early on is essential to ensuring your dependencies succeed in future runs.

App non-responsive

This status means that the application is repeatedly blocking the main thread.

You can download the logs to find out which interaction caused it.

App failure

If we could not replay the test because of a network issue on our side, you will get an App failure error. This can usually be resolved by retrying the replay.
